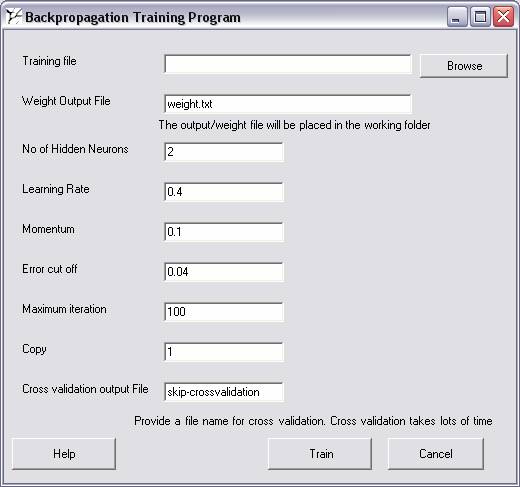

Training program: This program trains the network. Clicking the Training button opens the window shown below.

Training file: Use the “Upload” button to select the training file. The program is very sensitive to the file format, which is illustrated by the following example:

Samples 4

Parameters 2

Sample1 0 0 0

Sample2 1 0 1

Sample3 1 1 0

Sample4 0 1 1

NOTE: The delimiter can be a space or a tab.

The first line should contain the word “samples” (or any other word) followed by the total number of samples. In this example it is 4.

The second line should contain the word “parameters” (or any other word) followed by the total number of input parameters, which is also taken as the number of input neurons. In this example it is 2.

From the third line onwards, each line describes one sample. The first column is the sample name, which must be a single word. The second column is the expected output; it can be a decimal number, but 0 or 1 is preferred. The remaining columns in the row are the inputs to the system. They can be binary (0 and 1) or decimal (e.g. 0.02). The program gives optimum results only when the data lie in the range 0 to 1.
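The format rules above can be checked before uploading a file. The following is a minimal sketch in Python, not part of the program itself; the function names `parse_training_text` and `min_max_scale` are hypothetical, and the rescaling step assumes that simple min-max scaling is an acceptable way to bring a column into the 0 to 1 range.

```python
def parse_training_text(text):
    """Parse the training format: two header lines, then one sample per line."""
    # Blank lines are skipped; split() accepts both spaces and tabs as delimiters.
    rows = [line.split() for line in text.splitlines() if line.strip()]
    n_samples = int(rows[0][-1])   # line 1: any word, then the number of samples
    n_params = int(rows[1][-1])    # line 2: any word, then the number of inputs
    samples = []
    for fields in rows[2:]:
        # Each sample line holds: name, expected output, then n_params inputs.
        if len(fields) != n_params + 2:
            raise ValueError(f"{fields[0]}: expected {n_params} inputs")
        name = fields[0]
        target = float(fields[1])
        inputs = [float(v) for v in fields[2:]]
        samples.append((name, target, inputs))
    if len(samples) != n_samples:
        raise ValueError(f"header says {n_samples} samples, found {len(samples)}")
    return samples


def min_max_scale(column):
    """Rescale one column of values linearly so that it spans 0 to 1."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]  # constant column: nothing to scale
    return [(v - lo) / (hi - lo) for v in column]


example = """Samples 4
Parameters 2
Sample1 0 0 0
Sample2 1 0 1
Sample3 1 1 0
Sample4 0 1 1
"""
samples = parse_training_text(example)
```

Here `samples` holds one `(name, expected output, inputs)` tuple per sample, so a mismatch between the header counts and the data is reported before training starts.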

If your data has samples as columns and parameters as rows, use the utility program “Format Data”. Use “Format Data” for gene-expression data as well. If your data consists of DNA sequences, use the utility tool “Format sequence data”. These utility tools convert your data to the format described above.
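To illustrate what converting "samples as columns" data involves, here is a rough Python sketch of the transposition. It is not the “Format Data” utility and makes no claim about how that utility works; the function name `to_training_format` and its arguments are assumptions made for illustration only.

```python
def to_training_format(sample_names, targets, param_rows):
    """Build training-file text from data stored with parameters as rows.

    param_rows[i][j] is the value of parameter i for sample j.
    """
    n_samples = len(sample_names)
    if len(targets) != n_samples or any(len(r) != n_samples for r in param_rows):
        raise ValueError("every row must have one value per sample")
    lines = [f"Samples {n_samples}", f"Parameters {len(param_rows)}"]
    # zip(*param_rows) transposes the table: one tuple of inputs per sample.
    for name, target, inputs in zip(sample_names, targets, zip(*param_rows)):
        values = " ".join(str(v) for v in inputs)
        lines.append(f"{name} {target} {values}")
    return "\n".join(lines)


text = to_training_format(
    ["Sample1", "Sample2"],   # one name per column of the original table
    [0, 1],                   # expected output for each sample
    [[0, 0], [0, 1]],         # two parameters, each with one value per sample
)
```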

Output file: Provide an output file name. All the information from training, in the form of weights, will be saved in this file, which is written to the working directory. You will need to upload this file when using the testing program.

No of hidden neurons: This is the total number of hidden neurons in the network. Ideally it should be less than the number of input neurons. As a rule of thumb, the best results are obtained by taking the number of hidden neurons as the square root of the number of input neurons, rounded to the nearest integer. If there are too few hidden neurons, the system may never learn. If there are too many, the network may learn the training data better but generalize poorly; in other words, it will perform very well on the training set but fail on the independent (testing) set.
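The square-root rule of thumb is easy to compute by hand, but a short Python sketch makes the rounding explicit. The function name `suggested_hidden_neurons` is hypothetical, and the lower bound of one hidden neuron is an added assumption so that very small inputs still give a usable network.

```python
import math


def suggested_hidden_neurons(n_inputs):
    """Square root of the number of input neurons, rounded to the nearest integer."""
    if n_inputs < 1:
        raise ValueError("the network needs at least one input neuron")
    # Assumption: never suggest fewer than one hidden neuron.
    return max(1, round(math.sqrt(n_inputs)))


suggestion_for_example = suggested_hidden_neurons(2)     # the 2-input example file
suggestion_for_100_inputs = suggested_hidden_neurons(100)
```

Treat the result as a starting value and adjust it if the network fails to learn or generalizes poorly.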

Learning rate: The learning rate determines how fast the network learns. With a very small learning rate, training may take an impractically long time. With a very high learning rate, the error may oscillate instead of decreasing and the network may not train at all. If you are an inexperienced user, do not change the default value.

Momentum: During training, the error may settle in a local minimum and stop converging. A momentum term helps the network escape the local minimum and continue towards the global minimum.
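The manual does not state the exact update rule the program uses. As an illustration only, the sketch below assumes the common gradient-descent-with-momentum form, in which each weight change is the negative gradient scaled by the learning rate plus a fraction (the momentum) of the previous change. The function name `momentum_step` and the toy error function are hypothetical.

```python
def momentum_step(weights, gradients, previous_deltas, learning_rate, momentum):
    """One weight update: delta = -learning_rate * gradient + momentum * previous delta."""
    deltas = [
        -learning_rate * g + momentum * d
        for g, d in zip(gradients, previous_deltas)
    ]
    new_weights = [w + d for w, d in zip(weights, deltas)]
    return new_weights, deltas


# Toy problem (an assumption for illustration): minimise E(w) = (w - 3)^2,
# whose gradient is 2 * (w - 3). The minimum is at w = 3.
weights, deltas = [0.0], [0.0]
for _ in range(300):
    gradients = [2.0 * (weights[0] - 3.0)]
    weights, deltas = momentum_step(weights, gradients, deltas,
                                    learning_rate=0.1, momentum=0.9)
```

Because part of the previous step is carried forward, the weight keeps moving even where the gradient is momentarily small, which is the behaviour the momentum setting relies on.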

Error cut-off: The error decreases as training iterates. The program stops once the error reaches the error cut-off or the maximum number of iterations is reached.

Maximum iteration: The maximum number of training cycles performed by the program. The program stops earlier if the error falls below the error cut-off.

Copy: The program saves a copy of the dynamically changing weights after every “Copy” number of iterations. If you interrupt the program partway through, you will still have the most recent copy of the weights.
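How the error cut-off, maximum iteration and copy settings interact can be sketched as a simple loop. This is an illustration in Python, not the program's actual code: `run_training`, the `step` callback and the toy halving example are hypothetical, and the copy is kept in memory here, whereas the program writes its weights to the output file.

```python
import copy


def run_training(step, weights, error_cutoff, max_iterations, copy_every):
    """Train until the error reaches the cut-off or max_iterations is hit.

    step(weights) must return (new_weights, error) for one training cycle.
    """
    saved = copy.deepcopy(weights)   # last copy of the weights
    error = float("inf")
    iteration = 0
    while iteration < max_iterations:
        weights, error = step(weights)
        iteration += 1
        if iteration % copy_every == 0:
            saved = copy.deepcopy(weights)   # periodic copy
        if error <= error_cutoff:
            break                            # error cut-off reached
    return weights, saved, iteration, error


def halve(weights):
    """Toy training cycle: halve the weight; the error is the weight squared."""
    new_weights = [w / 2 for w in weights]
    return new_weights, new_weights[0] ** 2


final, saved, iterations, error = run_training(
    halve, [1.0], error_cutoff=0.01, max_iterations=100, copy_every=3
)
```

In this toy run the loop stops after 4 iterations because the error drops below the cut-off, while the saved copy still holds the weights from iteration 3. That saved copy is what remains available if the run is interrupted between copies.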

Cross validation output file: By default the system does not perform any cross-validation. If you provide a file name, the system performs leave-one-out cross-validation, which is very computationally intensive. You are therefore advised to perform cross-validation only when you want to estimate the efficiency of your system. Cross-validation may take from days to months, depending on the size of the data.
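The cost of leave-one-out cross-validation follows from its definition: each sample is held out once, and the network is trained again on all the others. A minimal Python sketch of the splitting step is shown below; the function name `leave_one_out_splits` is hypothetical and the training itself is omitted.

```python
def leave_one_out_splits(samples):
    """Yield (training_samples, held_out_sample) once for every sample."""
    for i in range(len(samples)):
        held_out = samples[i]
        training = samples[:i] + samples[i + 1:]   # everything except sample i
        yield training, held_out


splits = list(leave_one_out_splits(["Sample1", "Sample2", "Sample3", "Sample4"]))
```

With 4 samples this gives 4 splits, so the network is trained 4 times. With thousands of samples it means thousands of complete training runs, which is why the process can take so long.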

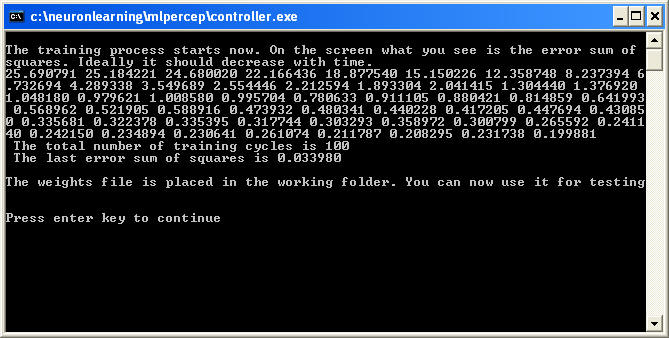

Click on Train and a DOS window will open. You will see values appearing on the DOS screen.

These values are the error sum of squares. Ideally the error should decrease over time, indicating that the network is learning. Training stops when the error reaches the error cut-off or the maximum number of iterations is reached. If you see that the error is no longer decreasing, you can close the DOS window and the last set of weights will be used for further calculations. However, if you have opted for cross-validation, closing the DOS window leaves the calculation incomplete, and you will get results only up to the number of cross-validations completed by the system.
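For reference, an error sum of squares is the sum, over all samples, of the squared difference between the expected output and the network's output. The Python sketch below assumes this plain form; the manual does not say whether the program applies any extra scaling (some implementations use a factor of one half), and the function name `error_sum_of_squares` is hypothetical.

```python
def error_sum_of_squares(targets, outputs):
    """Sum of squared differences between expected and actual outputs."""
    if len(targets) != len(outputs):
        raise ValueError("need one network output per expected output")
    return sum((t - o) ** 2 for t, o in zip(targets, outputs))


# Expected outputs from the example training file, and an untrained
# network that answers 0.5 for every sample (an assumed starting point).
untrained_error = error_sum_of_squares([0, 1, 1, 0], [0.5, 0.5, 0.5, 0.5])
perfect_error = error_sum_of_squares([0, 1, 1, 0], [0, 1, 1, 0])
```

As training proceeds, the printed value should move from something like the untrained error towards zero.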